by Simon Meacher | Jun 29, 2021 | DataAutomation, News, WhereScape

Engaging Data Explains:

Automated Deployment

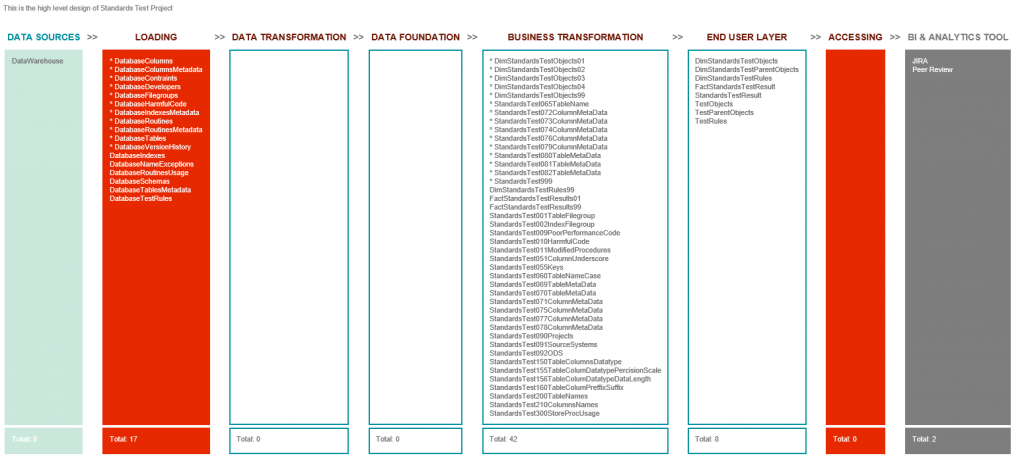

Continuous Delivery using Octopus Deploy

With WhereScape RED

Many companies are looking to make code changes and deployments easier. Often, the ability to deploy code to production is surrounded by red tape and audit controls. If you don’t have this, count yourself lucky!

Jenkins and Octopus Deploy are two of the many tools (see here) that help automate the deployment of code to production, allowing companies to adopt a continuous delivery/deployment approach.

For a long time, WhereScape RED has had its own method of automating deployment, using the command line functions.

Why Automate?

Tools such as WhereScape RED allow some elements of deployment to be automated. However, we know that companies like to use a common toolset for their code deployments and like having a single picture of all deployments. In most cases, they also need to release code on several different platforms, because RED doesn’t do everything.

Git?

No problem! There are several ways to do this. Our preferred option is to push the deployment application to the code repository. After all, it is more practical to store only the changes you want to push to Production, rather than every change to every object, including those that are not meant for Production!

Can I do This Now?

WhereScape RED uses a command prompt file to send commands to the admin EXE, and all changes are applied to the destination database via ODBC. Installation settings are configured in an XML file, and a log file is created as part of the process. The XML file contains the DSN of the destination database (let’s come back to this point later), along with all of the settings that are applied when deploying the application, such as Alter or Recreate Job. Please make sure these are set correctly: you do not want to recreate a Data Store table and lose its history!
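One wrong Recreate setting can wipe a Data Store table’s history, so it can be worth checking the XML before the admin EXE runs. The Python sketch below illustrates such a pre-deployment check. The XML layout is hypothetical and is not WhereScape RED’s actual schema: a `Deployment` element with a `dsn` attribute and `Object` children carrying `type` and `action`. The element and attribute names would need adapting to the file your version produces.

```python
import xml.etree.ElementTree as ET

# Hypothetical settings layout for illustration; WhereScape RED's real XML differs.
SAMPLE_XML = """
<Deployment dsn="DW_PROD">
  <Object name="ds_customer" type="DataStore" action="Recreate"/>
  <Object name="stage_orders" type="Stage" action="Recreate"/>
  <Object name="dim_product" type="Dimension" action="Alter"/>
</Deployment>
"""

# Object types whose tables keep history, so they must never be dropped and rebuilt.
PROTECTED_TYPES = {"DataStore"}


def check_settings(xml_text: str, expected_dsn: str) -> list[str]:
    """Return a list of problems found in the (hypothetical) settings XML."""
    root = ET.fromstring(xml_text.strip())
    problems = []
    # Make sure the release points at the intended destination database.
    if root.get("dsn") != expected_dsn:
        problems.append(f"DSN is {root.get('dsn')!r}, expected {expected_dsn!r}")
    # Flag any history-holding table that would be recreated rather than altered.
    for obj in root.iter("Object"):
        action = (obj.get("action") or "").lower()
        if obj.get("type") in PROTECTED_TYPES and action == "recreate":
            problems.append(f"{obj.get('name')} would be recreated and lose its history")
    return problems


for problem in check_settings(SAMPLE_XML, "DW_PROD"):
    print(problem)
```

A pipeline step could call `check_settings` with the DSN expected for the target environment and fail the release whenever the list is not empty.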

Permissions are important. The key to running the command line to publish changes to production is that the service account executing the commands has permission to change the underlying database.

Integration with Octopus

Octopus Deploy uses PowerShell as its common scripting language, so we adapted all of our WhereScape BAT files to PowerShell to get everything working.

Building a list of repeatable tasks within Octopus is easy and provides an opportunity to create a standard release process that meets your company’s standards and processes. Tasks include database backups, metadata backups and much, much more!

It can even run test scripts!
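In Octopus these steps would be PowerShell scripts. Purely to illustrate a standard, repeatable release process, here is a minimal Python sketch. The step names and the stub functions (`lambda: True`) are hypothetical stand-ins for real backup, deployment and test scripts. Steps run in a fixed order and the release stops at the first failure, so later steps never run after something has gone wrong.

```python
from typing import Callable

# A release step: a display name plus a function that returns True on success.
Step = tuple[str, Callable[[], bool]]


def run_release(steps: list[Step]) -> list[str]:
    """Run steps in order, stopping at the first failure; return a simple log."""
    log = []
    for name, action in steps:
        ok = action()
        log.append(f"{name}: {'ok' if ok else 'FAILED'}")
        if not ok:
            log.append("Release stopped; later steps skipped.")
            break
    return log


# Hypothetical stubs standing in for real scripts; the test step fails here.
release = [
    ("Back up database", lambda: True),
    ("Back up RED metadata", lambda: True),
    ("Deploy WhereScape application", lambda: True),
    ("Run test scripts", lambda: False),
]

for entry in run_release(release):
    print(entry)
```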

We used a PowerShell script to create a full backup of the database, to be used should the deployment fail. With a larger database, this may not always be the best solution. Depending on your environment setup, you may be able to use OS snapshots or other methods to roll back the changes. The good news is that Octopus Deploy works with most technologies, so you should find something that suits your technology stack.
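The backup here was done by a PowerShell script. The Python sketch below shows the kind of statement such a step might issue, assuming a SQL Server target; RED also supports other platforms, where the backup command differs. The database name and backup folder are hypothetical. `COPY_ONLY` keeps this ad hoc safety backup from disturbing the regular backup schedule. `CHECKSUM` asks SQL Server to verify page checksums and write a backup checksum.

```python
from datetime import datetime


def quote_ident(name: str) -> str:
    """Bracket-quote a SQL Server identifier, escaping any closing brackets."""
    return "[" + name.replace("]", "]]") + "]"


def pre_deploy_backup_sql(database: str, backup_dir: str, now: datetime) -> str:
    """Build a T-SQL full backup statement for a pre-deployment safety copy."""
    stamp = now.strftime("%Y%m%d_%H%M%S")
    folder = backup_dir.rstrip("\\")
    path = f"{folder}\\{database}_predeploy_{stamp}.bak"
    # Escape single quotes so the path is safe inside an N'...' string literal.
    path_literal = path.replace("'", "''")
    return (
        f"BACKUP DATABASE {quote_ident(database)} "
        f"TO DISK = N'{path_literal}' "
        # COPY_ONLY: an out-of-band backup that leaves the normal backup sequence intact.
        "WITH COPY_ONLY, INIT, CHECKSUM;"
    )


# Hypothetical database name and folder.
print(pre_deploy_backup_sql("EDW", "D:\\Backups", datetime(2021, 6, 29, 9, 30, 0)))
```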

Recently, we have been experimenting with creating rollback WhereScape applications on the target data warehouse. These are great for restoring the structure of the objects quickly and easily, and reducing risk is a great value-add!

Go, Go, Go!

Triggering the deployment was easy. We could have set this up in many ways, but we used a “do the application files exist?” trigger to get things started, until the humans learned to trust the Octopus process.
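A “do the files exist?” trigger boils down to a check like the one below. This Python sketch is an illustration under assumptions, not the trigger Octopus itself provides. The drop folder and file names are hypothetical. It also insists that the files are non-empty, which makes it less likely that a half-copied release starts a deployment.

```python
from pathlib import Path

# Hypothetical file names for an exported WhereScape application and its settings.
EXPECTED_FILES = ["release_app.package", "install_settings.xml"]


def application_ready(drop_dir: Path, expected_files: list[str]) -> bool:
    """True only when every expected file exists in drop_dir and is non-empty."""
    for name in expected_files:
        path = drop_dir / name
        # A missing or zero-byte file means the release is not ready yet.
        if not path.is_file() or path.stat().st_size == 0:
            return False
    return True


# Hypothetical drop folder that the pipeline would poll.
print(application_ready(Path("deploy_drop"), EXPECTED_FILES))
```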

Linking the release to Jira makes triggering just as simple. Imagine you’ve completed development and want to send the code to UAT. You click the button to update the ticket… wait a few seconds… and the code is deployed! It’s more complicated to set up, but you get the idea.
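Behind that button, Jira can send a webhook when a ticket changes status, and something has to decide whether that change should start a deployment. The Python sketch below assumes Jira’s issue-updated webhook payload, which lists field changes under `changelog.items`. It also uses a hypothetical “Ready for UAT” status. Creating the actual Octopus release is left out, because that depends on your Octopus setup.

```python
import json
from typing import Optional

# Hypothetical workflow status that should trigger a UAT deployment.
TRIGGER_STATUS = "Ready for UAT"


def issue_to_deploy(payload: dict) -> Optional[str]:
    """Return the issue key if this webhook moved the ticket into TRIGGER_STATUS."""
    if payload.get("webhookEvent") != "jira:issue_updated":
        return None
    items = (payload.get("changelog") or {}).get("items", [])
    for item in items:
        # Only a status change into the trigger status counts.
        if item.get("field") == "status" and item.get("toString") == TRIGGER_STATUS:
            return payload.get("issue", {}).get("key")
    return None


example = json.loads("""
{"webhookEvent": "jira:issue_updated",
 "issue": {"key": "DW-123"},
 "changelog": {"items": [{"field": "status", "fromString": "In Review", "toString": "Ready for UAT"}]}}
""")
print(issue_to_deploy(example))  # DW-123
```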

Final Thoughts

Octopus is a great tool, and its automation really helps to control the deployment process. Coupled with WhereScape automation, it provides an excellent end-to-end solution for data warehousing.

If you are interested in CI/CD and WhereScape RED/3D, book a call with us and find out how it could help your team.

by Simon Meacher | May 28, 2021 | DataAutomation, News, WhereScape

Engaging Data Explains:

Documenting The Modern Day Data Warehouse

When you’re operating a modern-day data warehouse, documentation is simply part of the job. It is important, but it is not necessarily the easiest or most straightforward part of the process. Documentation is, in fact, invaluable to the continued development, expansion and enhancement of a data warehouse. It’s therefore important to understand everything involved in documenting it adequately, so that your data warehouse processes run smoothly.

Understanding your Audience

One of the first things to understand is who you are compiling the documentation for. Support, developers, data visualisation experts, and business users could all be possible recipients. Before you answer this question, you really need to fully understand the way that your organisation operates, and open the lines of communication with the appropriate departments.

A two-way dialogue will be productive throughout this ongoing process, and it will help ensure that you keep the documents in line with the design. This is vitally important, as any conflict between the two makes the whole process less constructive than it should be.

This is especially vital considering how fast documentation moves nowadays. Everything has gone online, and much of it lives in wikis. Whether it’s Confluence, SharePoint or Teams, businesses produce all sorts of wiki documents to share important information. These shared documents are updated with increasing regularity, so it is important to get your strategy in place before you begin.

Different approaches to data warehouse design also affect how long a document stays current before it needs updating. If you are lucky enough to make weekly changes to your data warehouse, you will also be making incremental changes to the documentation every week. Development teams can spend hours updating documentation rather than doing what they are good at: developing data solutions! Naturally, minimising this wherever possible is preferable.
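One way to reduce that effort is to generate the routine parts of the documentation from metadata the warehouse already holds. The Python sketch below is a minimal illustration only. The `Column` structure and `table_doc` function are hypothetical; in practice the metadata would be read from your database catalogue or RED’s metadata repository. The output is a Markdown table, a format many wikis can display or import. A page built this way can be regenerated with each release instead of being edited by hand.

```python
from dataclasses import dataclass


@dataclass
class Column:
    """Hypothetical column metadata, as it might be read from a catalogue."""
    name: str
    data_type: str
    description: str


def table_doc(table: str, columns: list[Column]) -> str:
    """Render one table's column metadata as a Markdown section."""
    lines = [f"## {table}", "", "| Column | Type | Description |", "| --- | --- | --- |"]
    for col in columns:
        # Escape pipes so a description cannot break the table layout,
        # and make missing descriptions visible instead of leaving them blank.
        desc = col.description.replace("|", "\\|") or "_(undocumented)_"
        lines.append(f"| {col.name} | {col.data_type} | {desc} |")
    return "\n".join(lines)


print(table_doc("dim_customer", [
    Column("customer_key", "int", "Surrogate key"),
    Column("segment", "varchar(20)", ""),
]))
```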

Self-Service Business Intelligence

Documentation is also crucial in self-service business intelligence. Integrating private and local data into existing reports, analyses or data models requires accurate documentation. This data can come from Excel workbooks, flat files or a variety of external sources.

By creating self-service functionality, business users can quickly integrate data into what are often vital reports. Local data can even be used to extend the information delivered by the data warehouse, which limits the workload on data management. The pressure on business intelligence teams can be quite intense, so anything that lessens the load is welcome.

Another important benefit of documentation is that it reduces the number of questions typically directed at the IT and data warehousing teams. Anyone who works in IT knows only too well how much pressure internal and external enquiries can create. Again, anything that reduces this is welcome.

The data warehouse team also has a huge responsibility within any organisation. It is required to produce a vast amount of information for front-end business users, and getting documentation right can certainly help with this.

Importance of Transparency

One aspect of documentation that is sometimes overlooked is transparency. This applies at every level of an organisation, and sharing everything related to the documentation is vital. Once this level of transparency is in place, people who understand the data deeply can improve the documentation, or suggest changes to the Extract, Transform and Load (ETL) or Extract, Load and Transform (ELT) processes where necessary.

Conversely, it’s also important to understand that not all technology is suitable for documentation. As much as businesses and organisations would love this process to be completely holistic, this is not always possible.

For example, packages such as Power BI, QlikView, Qlik Sense and even Microsoft’s trusty Excel are not necessarily ready to be documented. These packages can use data, but often cannot produce a document set that explains how the data is being used, and for what purpose. Power BI has recently added data lineage features, but these remain better suited to IT teams than to business users.

Documenting data across multiple technologies is tricky, but wikis give IT teams the ability to collate all of this information into a central hub of knowledge, making it much easier to access.

Conclusion

Ultimately, IT departments, data warehousing teams, and report developers should all be encouraged to produce documentation that contributes to the overall aims of their organisations. Anything excessively technical is not good enough for modern business requirements, especially considering the importance of communication, and of ensuring that everyone within an organisation is acquainted with as much vital data as possible.

Modern-day technology makes this goal a reality, so it is increasingly an expectation of end-users. Failing to prepare properly in this area really could mean preparing to fail, as organisations will simply fail to meet the expectations of the market. It is therefore vital to treat documentation diligently.

Getting this piece right will go a long way towards helping with data governance!

If you would like to know more about how Engaging Data helps companies to automate documentation, please contact us using the details below.

by Simon Meacher | Jun 8, 2020 | News

Engaging Data & Girls Football

In the 2019/20 season, we sponsored Pace Youth’s U16 Bobcats girls’ football team in Southampton, who play in the Hampshire Girls Football League.

Instead of putting our logo on the shirt, we chose to support Young Minds, the UK’s leading charity fighting for children and young people’s mental health.

Engaging Data will continue to support the team and its charity, Young Minds, going into the 2020/21 season!

Young Minds is a great place for parents and children to find support. If you are able to, please support this fantastic charity: Donate Here.

COVID-19 cut the season short, but we hope the team get back to playing football safely. Who knows what football will look like next season, at professional or grassroots level? We can’t wait to hear how the team get on.

Good Luck Bobcats!

by Simon Meacher | Jun 3, 2020 | News

Engaging Data & Redgate Partnership!

We are really excited to go into partnership with Redgate.

Using the right tools for the data job.

Find out more about them here. Redgate’s software can help with everything from development to environment controls, and its tools are perfect for any data developer!

Redgate develops tools for developers and data professionals. These products are a natural fit for Engaging Data, providing suitable tools for data development teams and enhancing or streamlining their processes. Redgate produces specialised database management tools for Microsoft SQL Server, Oracle, MySQL and Microsoft Azure.

Driven by Engaging Data consultants, all of these platforms and tools create engaging data solutions.

Our consultants are already using Redgate software in a project to mask data for a data warehouse, and we will share some of the challenges and how we’ve built robust solutions using Redgate’s tools!

If you would like to know more about the tools we use, or have a question about Redgate’s products, please get in touch!