The Problems of Documenting a Data Warehouse

More data is being collected, stored, and analysed than ever before. One of the challenges of the digital age is how and where to store all this data safely and accessibly.

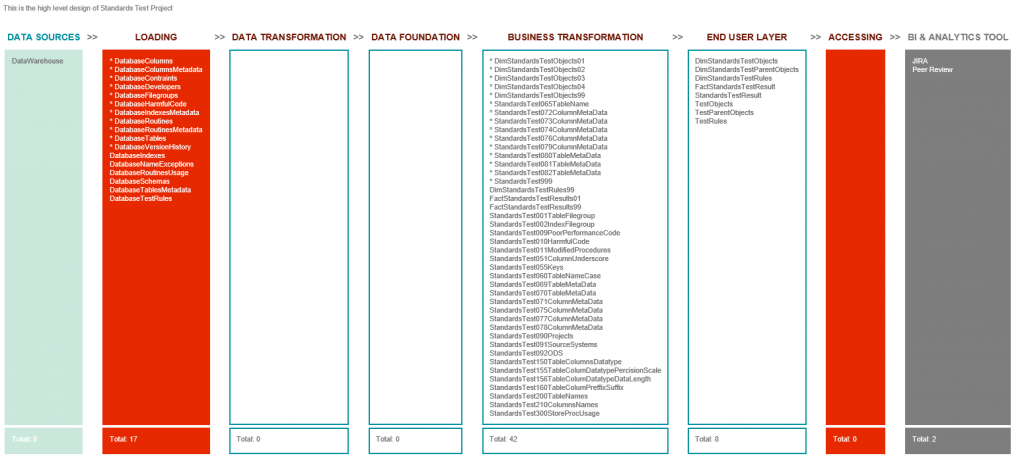

A modern Data Warehouse can solve many of these issues, using a multi-tiered architecture so that users with different needs can access the information they require. To expand and develop a Data Warehouse, documentation is invaluable.

Are you considering a documentation-led approach to your Data Warehouse? Then read on to find out more!

What is Documentation?

Documentation is vital to a Data Warehouse in many ways: it is how you ensure that your data can be understood and accessed by any user across your organisation. Documentation explains how your data was created, its context, structure, and content, and any manipulations applied to it.

Documentation is crucial if you want to keep developing, expanding, and enhancing your Data Warehouse. To keep the warehouse and its processes running smoothly, though, you need to understand what documentation entails.

Documenting a Data Warehouse

As we said, the amount of data that organisations collect and store is increasing, and a traditional Data Warehouse built on a simpler database structure will often struggle to cope. This is partly due to the sheer volume of information it must store and analyse, and partly because that information needs to be accessed by various users, often in different ways. A document-based approach to data warehousing allows data from multiple sources to be streamlined and supports multi-user access.

When documenting your Data Warehouse, begin by creating standards for your documentation, data structure names, and ETL processes, as these form the foundation upon which everything else is built. A robust Data Warehouse will have clear, understandable documentation.

The success of a Data Warehouse implementation often comes down to the solution’s documentation, design, and performance. If you accurately capture and document the business requirements, you should be able to develop a solution that meets the needs of all users across the organisation.

At Engaging Data, documenting a Data Warehouse has become second nature. Although it’s not necessarily the easiest or most logistically straightforward part of the process, it’s necessary to ensure your data warehouse processes run smoothly.

What Documentation do I need for a Data Warehouse project?

The exact documentation you need may vary with your particular Data Warehousing project. However, these are the key documents you should have:

The Business Requirements Document

outlines and defines the project scope and top-level objectives from the perspective of the management team and project managers.

Functional/information requirements document

outlines the functions that different users must be able to perform once the project is complete. This document helps you focus on what the Data Warehouse will be used for and what data and information users will need from it.

The fact/qualifier matrix

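is a powerful tool that maps each fact (a metric, such as sales amount) against the qualifiers (the dimensions, such as date or product, by which it is analysed). It helps the team understand the metrics and relate them to what is outlined in the business requirements document.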
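As a rough illustration, the matrix can be held as a mapping from each fact to the set of qualifiers it supports, which also makes it easy to check a requirement against it. This is a minimal Python sketch; every fact, qualifier, and function name in it is a hypothetical example, not part of any particular tool or project.

```python
# Minimal sketch of a fact/qualifier matrix.
# All fact and qualifier names are hypothetical examples.

FACTS = ["sales_amount", "units_sold", "return_count"]
QUALIFIERS = ["date", "product", "store", "customer"]

# For each fact (measure), the qualifiers (dimensions) it can be analysed by.
MATRIX: dict[str, set[str]] = {
    "sales_amount": {"date", "product", "store", "customer"},
    "units_sold": {"date", "product", "store"},
    "return_count": {"date", "product"},
}


def render_matrix(facts: list[str], qualifiers: list[str],
                  matrix: dict[str, set[str]]) -> str:
    """Render the matrix as a plain-text grid, marking applicable cells with X."""
    width = max(len(f) for f in facts)
    rows = [" " * width + " | " + " | ".join(qualifiers)]
    for fact in facts:
        cells = [("X" if q in matrix.get(fact, set()) else "").center(len(q))
                 for q in qualifiers]
        rows.append(fact.ljust(width) + " | " + " | ".join(cells))
    return "\n".join(rows)


def unsupported(requirement: dict[str, list[str]],
                matrix: dict[str, set[str]]) -> list[tuple[str, str]]:
    """List (fact, qualifier) pairs a requirement asks for that the matrix lacks."""
    return [(fact, q) for fact, qs in requirement.items()
            for q in qs if q not in matrix.get(fact, set())]


if __name__ == "__main__":
    print(render_matrix(FACTS, QUALIFIERS, MATRIX))
    # Hypothetical requirement: report units sold by customer and by date.
    print(unsupported({"units_sold": ["customer", "date"]}, MATRIX))
    # [('units_sold', 'customer')]
```

The `unsupported` check mirrors how the matrix is used in practice: a gap between what the business requirements ask for and what the matrix records is flagged before any tables are built.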

A data model

is a visual representation of the data structures held within the Data Warehouse. It is a valuable visual aid for ensuring that the business’s data, analytical, and reporting needs are captured within the project, and it helps DBAs create the data structures that will house the data.

A data dictionary

is a comprehensive list of the data elements found in the data model, recording for each element its definition and the source database, table, and field from which it was created.
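To show the shape of a data dictionary entry, here is a minimal Python sketch that records the fields described above and checks the dictionary for elements of the data model that have not been documented yet. The element, database, table, and field names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DataDictionaryEntry:
    """One data element, as described in the data dictionary."""
    element: str          # name of the element in the data model
    definition: str       # business definition of the element
    source_database: str  # database the element was created from
    source_table: str     # table within that database
    source_field: str     # field within that table


# Hypothetical entries, for illustration only.
DICTIONARY = [
    DataDictionaryEntry("customer_name", "Full name of the customer",
                        "crm_db", "customers", "full_name"),
    DataDictionaryEntry("order_date", "Date the order was placed",
                        "sales_db", "orders", "created_at"),
]


def undocumented(model_elements: set[str],
                 dictionary: list[DataDictionaryEntry]) -> set[str]:
    """Return data model elements that have no data dictionary entry."""
    return model_elements - {entry.element for entry in dictionary}


if __name__ == "__main__":
    model = {"customer_name", "order_date", "order_total"}
    print(sorted(undocumented(model, DICTIONARY)))  # ['order_total']
```

Keeping the dictionary as structured records rather than free text is what makes a completeness check like this possible.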

Source to target ETL mapping document

lists each element of the target data structure, together with the source of its data and any transformation the source element goes through before landing in the target table.
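A source-to-target mapping can also be kept in a form that is both readable and executable. The minimal Python sketch below assumes each mapping row names a target table and column, a source written as a hypothetical `database.table.field` string, a transformation, and a plain-English rule for the documentation. All names are illustrative, not taken from a real ETL tool.

```python
from dataclasses import dataclass
from typing import Any, Callable


@dataclass(frozen=True)
class Mapping:
    """One row of a source-to-target mapping document."""
    target_table: str
    target_column: str
    source: str                      # "database.table.field" of the source element
    transform: Callable[[Any], Any]  # transformation applied before loading
    rule: str                        # human-readable description of the transform


# Hypothetical mappings for a dim_customer target table.
MAPPINGS = [
    Mapping("dim_customer", "customer_name", "crm_db.customers.full_name",
            lambda v: v.strip().title(), "Trim whitespace, title case"),
    Mapping("dim_customer", "country_code", "crm_db.customers.country",
            lambda v: v.strip().upper(), "Trim whitespace, upper case"),
]


def build_target_row(source_row: dict[str, Any],
                     mappings: list[Mapping]) -> dict[str, Any]:
    """Apply each mapping to a source row keyed by source field name."""
    row = {}
    for m in mappings:
        field = m.source.rsplit(".", 1)[-1]  # last part is the field name
        row[m.target_column] = m.transform(source_row[field])
    return row


if __name__ == "__main__":
    src = {"full_name": "  ada lovelace ", "country": "gb"}
    print(build_target_row(src, MAPPINGS))
    # {'customer_name': 'Ada Lovelace', 'country_code': 'GB'}
    for m in MAPPINGS:
        print(f"{m.source} -> {m.target_table}.{m.target_column}: {m.rule}")
```

The `rule` text is what a reader of the mapping document sees, while `transform` is what actually runs; keeping both on the same row makes it harder for the documentation and the load logic to drift apart.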

What are the problems of Documenting a Data Warehouse?

Documenting a Data Warehouse can be a massive project, depending on the amount of data, the number of users that need access, and the business requirements. As the volume of data held in a Data Warehouse grows, management systems must dig further to find and analyse it. This is a particular issue for traditional Data Warehouses, where speed and efficiency can drop as data volume increases.

Generally, taking the time to understand and document your business needs will make documenting your Data Warehouse easier, because Data Warehousing is driven by the data you provide. If you don’t map these critical pieces of information early in the process, you may run into problems later on. Similarly, it is important to process your data correctly and structure it in a way that makes sense for your organisation today and in the future. If you don’t set yourself up for the future, structuring data becomes more complex and processing can slow down as you add more information to your Data Warehouse. It can also make it harder for the system manager to read the data and optimise it for analytics.

Overall, the better your initial documentation, planning, and business information model, the smoother your implementation will be and the easier it will be to keep adding data to your warehouse. By carefully designing and configuring your data from the start, you’ll be rewarded with better results.

Another potential problem in documenting a Data Warehouse is choosing the wrong warehouse type for your business needs and resources. Many organisations allow several departments to access the system, which puts it under strain and reduces efficiency. By choosing the right type of warehouse for your organisation and making a future-proofed decision, you can balance the usefulness and performance of your Data Warehouse.

Data Warehousing is an excellent system for keeping up with your business’s various data needs. By making long-term decisions and preparing at the start, you can avoid many potential problems when documenting your Data Warehouse. You can also prevent many of the challenges associated with Data Warehouse deployment and implementation by using a tool like WS Doc.

What is WS Doc?

WS Doc is a simple-to-use tool that automates much of the work of documenting your Data Warehouse by publishing WhereScape documentation to your choice of wiki technology.

WS Doc also lets you collaborate on workflows, editing data sets and inputs, so that several users can work on the project simultaneously. Because it integrates with other apps and systems, WS Doc makes collaboration and streamlined working possible.

Why was WS Doc created?

WS Doc was created to bring document automation and assembly to more industries, turning tedious, detailed work into automated processes and systems.

Because WS Doc lets you gather data and instantly generate template documents, and even whole document sets, from your data, you can save up to 90% of the time you would otherwise spend drafting documentation.

Publishing WhereScape documentation automatically to your choice of wiki technology (Confluence, SharePoint, GitHub, or something else) gives your documentation the power of that platform, making it easier to digest, apply, and share.

Overall, WS Doc streamlines and automates the process, speeding it up and making it less resource-heavy.

Why Choose WS Doc?

In conclusion, by choosing WS Doc to document your Data Warehouse project, you’re using a simple tool to automate processes that would otherwise take a long time and consume a lot of resources, not to mention the risk of human error in work that demands close attention to detail and many repetitive actions.

We’ve discussed some potential problems you can run into when documenting a Data Warehouse. WS Doc helps you overcome these issues because it promotes effective communication and collaboration with the people using the data. It saves time and resources by automating the publication and implementation of documentation. Finally, it enhances your existing toolset, offering a mature, streamlined, and simple-to-use experience.

Here at Engaging Data, we use WS Doc to document the Data Warehouse projects we carry out for our clients.

If you’d like to learn more about the process or find out whether WS Doc is the right tool for your organisation, schedule a call with us!